Every year, Nature picks the technologies to watch in the year ahead. On January 22, the journal released its list of seven technology areas to watch this year, three of which relate to synthetic biology. They are:

Deep learning for protein design

Two decades ago, David Baker and his colleagues at the University of Washington in Seattle achieved a landmark feat: they used computational tools to design an entirely new protein from scratch. The protein, “Top7”, folded as predicted, but it was inert: it performed no meaningful biological function.

De novo protein design has since matured into a practical tool for generating custom enzymes and other proteins.

“This has huge implications,” says Neil King, a biochemist at the University of Washington who works with Baker’s team to design protein-based vaccines and drug delivery vehicles. “It’s now possible to do something that wasn’t possible a year and a half ago.”

These advances are largely due to the increasingly large data sets linking protein sequences to structures. But sophisticated deep learning methods, a form of artificial intelligence (AI), are also crucial.

One strategy, the “sequence-based” approach, uses the large language models (LLMs) that power tools such as the chatbot ChatGPT.

By treating protein sequences like documents made up of polypeptide “words,” these algorithms can discern the patterns that underlie the architectural rules of real-world proteins.

“They really learned the hidden grammar,” says Noelia Ferruz, a protein biochemist at the Institute of Molecular Biology in Barcelona, Spain.
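The idea of learning a "grammar" from sequences can be illustrated with a deliberately tiny stand-in. The sketch below is not ProtGPT2 or any real protein language model: it is a bigram model that only counts which residue tends to follow which, and the training sequences are made-up strings in one-letter amino-acid code. It is meant only to show the principle of generating new sequences from patterns observed in existing ones.

```python
import random
from collections import defaultdict

# Hypothetical toy "training set": short made-up sequences in one-letter
# amino-acid code, not real proteins.
TRAINING_SEQUENCES = ["MKTAYIAKQR", "MKVLAAGIVG", "MSTAYLAKQG"]

START, END = "^", "$"


def train_bigram_model(sequences):
    """Count how often each residue follows another across the sequences."""
    counts = defaultdict(lambda: defaultdict(int))
    for seq in sequences:
        tokens = [START] + list(seq) + [END]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def sample_sequence(counts, rng, max_length=30):
    """Generate a new sequence one residue at a time from the learned counts."""
    residues = []
    current = START
    while len(residues) < max_length:
        choices = list(counts[current].keys())
        weights = list(counts[current].values())
        current = rng.choices(choices, weights=weights, k=1)[0]
        if current == END:
            break
        residues.append(current)
    return "".join(residues)


model = train_bigram_model(TRAINING_SEQUENCES)
generated = sample_sequence(model, random.Random(0))
print(generated)
```

Every adjacent pair of residues in the output was seen somewhere in the training set, yet the full sequence need not match any training example. Real LLMs condition on far longer contexts and far larger data sets, which is what lets them capture structure that a bigram count cannot.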

In 2022, her team developed an algorithm called ProtGPT2 that consistently produces synthetic proteins that fold stably when produced in the lab.

Another tool co-developed by Ferruz is called ZymCTRL, which uses sequence and functional data to design members of naturally occurring enzyme families.

Sequence-based approaches can build on and adapt existing protein features to form new frameworks, but they are less effective for the bespoke design of structural elements or features, such as the ability to bind specific targets in a predictable way.

“Structure-based” approaches are better suited to these problems, and 2023 also saw significant progress in this type of protein-design algorithm.

Some of the most sophisticated of these use “diffusion” models, which also underlie image-generation tools such as DALL-E.

These algorithms are initially trained to remove computer-generated noise from a large number of real structures; by learning to distinguish realistic structural elements from noise, they gain the ability to form biologically plausible, user-defined structures.
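The noise-removal objective can be sketched in a few lines. This is a generic, simplified diffusion-style example, not the RFdiffusion or Chroma implementation: the one-dimensional "coordinates", the `signal_fraction` parameter and both function names are assumptions made for illustration. It shows that if the added noise is predicted correctly, the clean structure can be recovered exactly, which is what training pushes a network towards.

```python
import math
import random


def add_noise(coords, signal_fraction, rng):
    """Forward step: blend clean values with Gaussian noise.

    signal_fraction is 1.0 for a clean structure and approaches 0.0
    for pure noise.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in coords]
    noisy = [
        math.sqrt(signal_fraction) * x + math.sqrt(1.0 - signal_fraction) * e
        for x, e in zip(coords, noise)
    ]
    return noisy, noise


def remove_noise(noisy, predicted_noise, signal_fraction):
    """Reverse step: recover the clean values from a noise estimate."""
    return [
        (y - math.sqrt(1.0 - signal_fraction) * e) / math.sqrt(signal_fraction)
        for y, e in zip(noisy, predicted_noise)
    ]


rng = random.Random(42)
# Hypothetical 1D "backbone coordinates" standing in for a real structure.
clean = [0.0, 1.5, 3.1, 4.4, 6.0]
noisy, true_noise = add_noise(clean, signal_fraction=0.5, rng=rng)

# A trained network would predict the noise; here the true noise is used as a
# stand-in "perfect" prediction to show what the training objective rewards.
recovered = remove_noise(noisy, true_noise, signal_fraction=0.5)
print([round(x, 6) for x in recovered])
```

In a real model the noise estimate comes from a neural network, and generation starts from pure noise and applies many small denoising steps, optionally steered by user-defined constraints.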

The RFdiffusion software developed in Baker’s lab and the Chroma tool developed by Generate Biomedicines of Somerville, Mass., leverage this strategy to remarkable effect.

For example, Baker’s team is using RFdiffusion to design new proteins that can form tight interfaces with targets of interest, resulting in designs that are “fully surface compliant,” Baker said.

RFdiffusion’s updated “all-atom” iteration allows designers to computationally shape proteins around non-protein targets, such as DNA, small molecules, and even metal ions. The resulting versatility opens up new horizons for engineered enzymes, transcriptional regulators, functional biomaterials, and more.

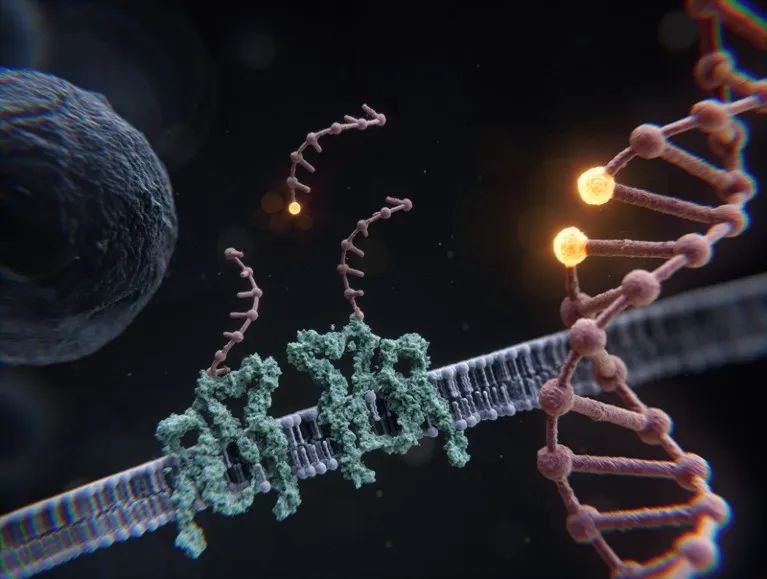

Large-fragment DNA insertion

In late 2023, regulators in the United States and the United Kingdom approved the first CRISPR-based gene editing therapies for the treatment of sickle cell disease and transfusion-dependent beta thalassemia, a major victory for genome editing as a clinical tool.

CRISPR and its derivatives use short, programmable RNAs to direct DNA-cutting enzymes, such as Cas9, to specific genomic sites.

They are often used in the laboratory to disable defective genes and introduce small sequence changes.

Accurately and programmatically inserting larger DNA sequences spanning thousands of nucleotides is difficult, but emerging solutions could allow scientists to replace key segments of defective genes or insert fully functional gene sequences.

Le Cong, a molecular geneticist at Stanford University in California, and his colleagues are exploring single-stranded annealing proteins (SSAPs), virus-derived molecules that mediate DNA recombination.

When combined with a CRISPR-Cas system in which the DNA-cutting function of Cas9 has been disabled, these SSAPs allow precisely targeted insertion of up to 2 kilobases of DNA into the human genome.

Other approaches use a CRISPR-based method known as “prime editing” to introduce short “landing pad” sequences that selectively recruit enzymes, which in turn precisely splice large pieces of DNA into the genome.

For example, in 2022, genome engineers Omar Abudayyeh and Jonathan Gootenberg at the Massachusetts Institute of Technology in Cambridge and their colleagues first described programmable addition via site-specific targeting elements (PASTE), a method that allows precise insertion of up to 36 kilobases of DNA.

PASTE is particularly promising for in vitro modification of cultured, patient-derived cells, Cong says, and the underlying prime-editing technology is already on track for clinical studies.

But for in vivo modification of human cells, SSAPs may offer a more compact solution: the bulkier PASTE machinery requires three separate viral vectors for delivery, which may reduce editing efficiency relative to the two-component SSAP system.

That said, even relatively inefficient gene replacement strategies are sufficient to mitigate the effects of many genetic diseases.

These approaches are not only relevant to human health. Researchers led by Caixia Gao of the Chinese Academy of Sciences in Beijing developed PrimeRoot, a method that uses prime editing to introduce specific target sites that enzymes can then use to insert up to 20 kilobases of DNA in rice and corn.

Gao believes that the technology can be widely used to give crops resistance to disease and pathogens, continuing to drive a wave of innovation in CRISPR-based plant genome engineering. “I believe this technique can be applied to any plant species,” she said.

Cell atlases

If you’re looking for a cafe, you can use Google Maps for directions. There is currently no equivalent for navigating the far more complex landscape of the human body, but steady progress in cell-mapping initiatives, driven by advances in single-cell analysis and “spatial omics” methods, could have profound implications for identifying disease-causing genes, understanding disease mechanisms, discovering drugs, diagnosing disease, and more.

The largest of these initiatives is the Human Cell Atlas (HCA). The consortium was launched in 2016 by Sarah Teichmann, a cell biologist at the Wellcome Sanger Institute in Hinxton, UK, and Aviv Regev, current head of research and early development at biotech company Genentech in South San Francisco, California.

It is made up of about 3,000 scientists from nearly 100 countries and works with tissue from 10,000 donors. But HCA is also part of a broader ecosystem of intersecting cellular and molecular mapping efforts.

These include the Human BioMolecular Atlas Program (HuBMAP) and the Brain Research through Advancing Innovative Neurotechnologies (BRAIN) Initiative Cell Census Network (BICCN), both funded by the US National Institutes of Health, as well as the Allen Brain Cell Atlas, funded by the Allen Institute in Seattle, Washington.

Michael Snyder, a Stanford University genomicist and former co-chair of HuBMAP’s steering committee, said these efforts are in part driving the development and rapid commercialization of analytical tools to decode molecular content at the single-cell level.

For example, Snyder’s team regularly uses the Xenium platform from 10x Genomics in Pleasanton, California, for spatial transcriptomic analysis. The platform can survey the expression of approximately 400 genes simultaneously in four tissue samples per week.

Another example is the PhenoCycler platform from Akoya Biosciences in Marlborough, Massachusetts, whose multiplexed, antibody-based methods allow the team to track large numbers of proteins at single-cell resolution in a format that supports 3D tissue reconstruction.

Other “multiomics” methods allow scientists to simultaneously analyze multiple molecular classes in the same cell, including RNA expression, chromatin structure, and protein distribution.

Last year, dozens of studies demonstrated progress in using these techniques to generate organ-specific maps. In June, for example, the HCA released a comprehensive analysis of 49 data sets on the human lung. “With a very clear map of the lungs, it’s possible to understand what’s happening with pulmonary fibrosis, different tumors, and even diseases like COVID-19,” Teichmann said. In 2023, Nature published a collection of articles (see [1]) highlighting HuBMAP’s progress, and Science produced a collection of articles detailing BICCN’s work (see [2]).

There is still a lot of work to be done, and Teichmann estimates that HCA will take at least five years to complete. But the final map will be invaluable when it arrives.

For example, Teichmann foresees using atlas data to guide tissue- and cell-specific drug targeting, while Snyder is eager to understand how the cellular microenvironment informs the risk and causes of complex diseases such as cancer and irritable bowel syndrome.

“We may not be able to solve this problem in 2024, it’s a multi-year problem, but there’s no denying it’s a big driver for the whole field,” Snyder said.

Other technologies to watch

Brain-computer interface

Pat Bennett speaks more slowly than the average person and sometimes uses the wrong words. But given that motor neurone disease, also known as amyotrophic lateral sclerosis, had previously left her unable to express herself verbally, it was a remarkable achievement.

Bennett’s recovery was made possible by a sophisticated brain-computer interface (BCI) device developed by Stanford neuroscientist Francis Willett and his colleagues at the US BrainGate Consortium.

Willett and his colleagues implanted electrodes in Bennett’s brain to track neuronal activity, then trained deep learning algorithms to translate those signals into speech.

After weeks of training, Bennett was able to say as many as 62 words per minute and had a vocabulary of 125,000 words, more than double the vocabulary of the average English speaker.

“The speed of their communication is really impressive,” says Jennifer Collinger, a bioengineer at the University of Pittsburgh in Pennsylvania who develops BCI technology.

BrainGate’s trial is just one of several studies in the past few years showing how brain-computer interface technology can help people with severe neurological damage regain lost skills and gain greater independence.

Leigh Hochberg, a neuroscientist at Brown University in Providence, Rhode Island, and director of the BrainGate Consortium, said some of these advances stem from a growing knowledge of the functional neuroanatomy in the brains of individuals with various neurological disorders.

But he adds that this knowledge has been greatly amplified by machine-learning-driven analytical methods that reveal how to better place electrodes and decode the signals they pick up.

Researchers are also applying AI-based language models to speed up the interpretation of what patients are trying to communicate: essentially “autocomplete” for the brain.

This was a central part of Willett’s study, as well as of another study by a team led by neurosurgeon Edward Chang at the University of California, San Francisco.

In that work, a BCI neuroprosthesis allowed a woman who had been unable to speak because of a stroke to communicate at 78 words per minute, about half the average rate of spoken English but more than five times faster than her previous speech-assistance device.

Progress has been made in other areas as well. In 2021, Collinger and Robert Gaunt, a biomedical engineer at the University of Pittsburgh, implanted electrodes into the motor and somatosensory cortices of quadriplegics to provide fast, precise control of a robotic arm as well as haptic feedback.

Separate clinical studies by researchers at BrainGate and UMC Utrecht in the Netherlands are also under way, as is a trial by BCI company Synchron in Brooklyn, New York, to test a system that allows paralyzed people to control computers: the first industry-sponsored trial of a BCI device.

As a critical care specialist, Hochberg is eager to make these technologies available to patients with the most severe disabilities. But with the development of brain-computer interface capabilities, he sees potential for treating mental health conditions such as moderate cognitive impairment and mood disorders. “A closed-loop neuromodulation system with information provided by a brain-computer interface could be a huge help to a lot of people,” he said.

Deepfake detection

The explosion of publicly available generative AI algorithms has made it simple to synthesize convincing but entirely artificial images, audio, and video. The results can be an entertaining diversion, but with multiple geopolitical conflicts ongoing and the US presidential election approaching, opportunities for weaponized media manipulation abound.

Siwei Lyu, a computer scientist at the University at Buffalo in New York, said he has seen a large number of AI-generated “deepfake” images and audio clips related to the Israel-Hamas conflict.

This is just the latest round in a high-stakes cat-and-mouse game in which AI users produce deceptive content, and Lyu and other media forensics experts work to detect and intercept it.

One solution is for generative AI developers to embed hidden signals in their models’ output, producing a watermark that marks the content as AI-generated. Other strategies focus on the content itself.

For example, some manipulated videos replace the facial features of one public figure with those of another, and new algorithms can identify artifacts at the boundaries of the replaced features.

The unique folds of a person’s outer ears can also reveal mismatches between the face and head, while irregularities in the teeth can reveal edited lip-sync videos in which a person’s mouth has been digitally manipulated to say things the subject did not say.

AI-generated photos also present a tricky challenge, and a moving target.

In 2019, Luisa Verdoliva, a media forensics expert at Federico II University in Naples, Italy, helped develop FaceForensics++, a tool for identifying faces manipulated by several widely used software packages. But image forensics methods are subject- and software-specific, and generalization is a challenge.

Then there are the implementation challenges. The Defense Advanced Research Projects Agency’s Semantic Forensics (SemaFor) program has developed a useful toolbox for deepfake analysis but, as reported in Nature (see Nature 621, 676-679; 2023), it is not routinely used by major social media sites.

Expanding access to such tools could help boost their uptake, and to that end, Lyu’s team developed Deepfake-o-Meter, a centralized public repository of algorithms that analyze video content from different angles to sniff out deepfake content.

Super-resolution microscopy

Stefan Hell, Eric Betzig and William Moerner won the 2014 Nobel Prize in Chemistry for breaking the “diffraction limit” that constrains the spatial resolution of optical microscopes.

The resulting level of detail (on the order of tens of nanometers) opens up a wide range of molecular-scale imaging experiments.

Still, some researchers are hungry for better results – and they’re making progress quickly.

“We are really trying to close the gap between super-resolution microscopy and structural biology techniques such as cryo-electron microscopy,” said Ralf Jungmann, a nanotechnology researcher at the Max Planck Institute of Biochemistry in Planegg, Germany. He was referring to a method that can reconstruct protein structures at atomic resolution.

Researchers led by Hell at the Max Planck Institute for Multidisciplinary Sciences in Göttingen, Germany, made a first foray into this realm in late 2022 with a method called MINSTED, which uses a specialized optical microscope to resolve individual fluorescent labels with a precision of 2.3 angstroms (about a quarter of a nanometer).

Newer methods provide comparable resolution using conventional microscopes. For example, Jungmann and his team described a strategy in 2023 in which individual molecules are labeled with different strands of DNA.

These molecules are then detected with dye-labeled complementary DNA strands that bind to their respective targets briefly but repeatedly, making it possible to distinguish individual fluorescent “blinking” points that would blur into a single spot if imaged simultaneously.

This method of enhanced resolution by sequential imaging (RESI) can resolve individual base pairs on DNA strands, thereby demonstrating angstrom resolution with standard fluorescence microscopy.
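One way to see why repeated binding helps is statistical: if each blink localizes a label with some uncertainty, averaging many independent blinks from the same label narrows the uncertainty of the averaged position by roughly the square root of the number of blinks. The sketch below illustrates that scaling under stated assumptions (Gaussian errors, an assumed 3 nm per-blink precision and 400 blinks per label). It is not the authors' analysis pipeline, and the function names are hypothetical.

```python
import math
import random
import statistics


def precision_of_mean(single_precision_nm, num_localizations):
    """Standard error of the mean of K independent localizations."""
    return single_precision_nm / math.sqrt(num_localizations)


def simulate_label_position(true_position_nm, single_precision_nm,
                            num_localizations, rng):
    """Average K noisy localizations of one label."""
    samples = [
        rng.gauss(true_position_nm, single_precision_nm)
        for _ in range(num_localizations)
    ]
    return statistics.fmean(samples)


rng = random.Random(1)
single_precision_nm = 3.0   # assumed per-blink localization precision
num_localizations = 400     # assumed number of blinks collected per label

# Repeat the experiment many times to measure the spread of the averaged position.
estimates = [
    simulate_label_position(0.0, single_precision_nm, num_localizations, rng)
    for _ in range(500)
]
predicted = precision_of_mean(single_precision_nm, num_localizations)
measured = statistics.stdev(estimates)
print(predicted)  # 0.15 nm, i.e. 1.5 angstroms
print(measured)   # empirical spread, close to the predicted value
```

Under these assumptions, a few hundred blinks per label are enough to move from nanometer-scale to angstrom-scale precision, provided that neighboring labels are imaged in separate rounds so their blinks are not mixed together.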

The one-step nanoscale expansion (ONE) microscopy method developed by a team led by neuroscientists Ali Shaib and Silvio Rizzoli at the University Medical Center in Göttingen, Germany, does not quite reach this level of resolution. However, ONE microscopy offers unprecedented opportunities to directly image fine structural details of individual proteins and multi-protein complexes, both in isolation and in cells.

Artist’s impression of RESI, showing DNA strands attached to cell-membrane proteins and a DNA strand with two adjacent bases highlighted

ONE is an expansion-microscopy-based approach that involves chemically coupling proteins in a sample to a hydrogel matrix, breaking the proteins apart, and then expanding the volume of the hydrogel by a factor of 1,000.

These fragments expand evenly in all directions, preserving the protein structure and enabling users to resolve features several nanometers apart using standard confocal microscopy.
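The arithmetic behind expansion can be made explicit. A 1,000-fold increase in volume, applied evenly in all directions, corresponds to a 10-fold stretch along each axis, so two points that were too close to separate optically end up 10 times farther apart. The sketch below works this through; the 250 nm figure for diffraction-limited confocal resolution is an assumption chosen for illustration, and the function names are hypothetical.

```python
def linear_expansion_factor(volume_factor):
    """Each dimension grows by the cube root of the volume expansion."""
    return volume_factor ** (1.0 / 3.0)


def effective_resolution_nm(optical_resolution_nm, volume_factor):
    """Smallest pre-expansion separation that the optics can still resolve."""
    return optical_resolution_nm / linear_expansion_factor(volume_factor)


volume_factor = 1000            # from the text
optical_resolution_nm = 250.0   # assumed diffraction-limited confocal resolution

linear_factor = linear_expansion_factor(volume_factor)
effective_nm = effective_resolution_nm(optical_resolution_nm, volume_factor)
print(round(linear_factor, 3))  # 10.0
print(round(effective_nm, 1))   # 25.0
```

With this assumed starting resolution, expansion alone gives an effective resolution of about 25 nm. Closing the rest of the gap to the few-nanometer figure quoted above would need additional processing that this passage does not describe.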

“We take the antibodies, put them in a gel, label them after expansion, and say, ‘Oh, we see a Y shape!’” Rizzoli said, referring to the antibodies’ characteristic shape.

Rizzoli says ONE microscopy could provide insight into conformationally dynamic biomolecules, or make it possible to visually diagnose protein-misfolding diseases such as Parkinson’s disease from blood samples. Jungmann is equally enthusiastic about RESI’s potential to document the reorganization of individual proteins in disease or in response to drug therapy. It may even be possible to zoom in more closely. “Maybe this isn’t the end of spatial resolution limitations,” Jungmann said. “Things could get better.”

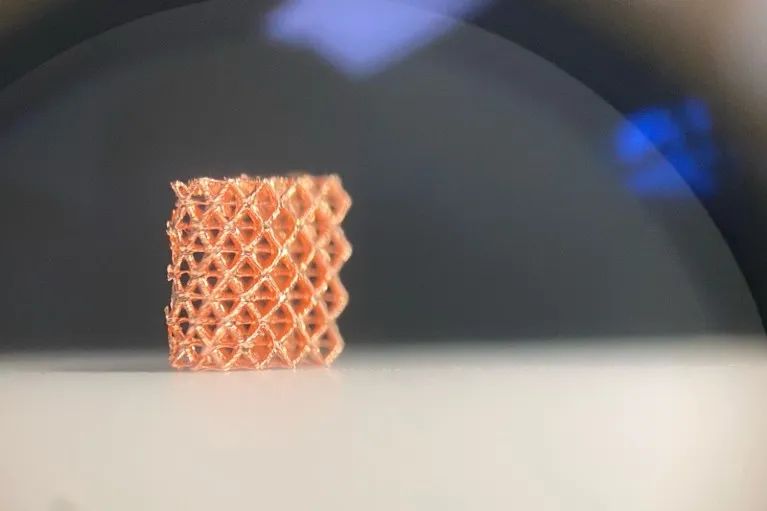

3D printing of nanomaterials

Strange and interesting things can happen at the nanoscale. This can make material science predictions difficult, but it also means that nanoscale architects can make lightweight materials with unique properties, such as increased strength, customized interactions with light or sound, and enhanced catalytic or energy storage capabilities.

Multiple strategies for precisely manufacturing such nanomaterials exist, most of which use lasers to induce patterned “photopolymerization” of photosensitive materials, and over the past few years, scientists have made considerable progress in overcoming limitations that have prevented wider adoption of these methods.

A miniature lattice structure made of copper using 3D printing technology

One is speed. Sourabh Saha, an engineer at the Georgia Institute of Technology in Atlanta, said that assembling nanostructures using photopolymerization is about three orders of magnitude faster than other nanoscale 3D printing methods.

This may be good enough for laboratory use, but too slow for mass production or industrial processes.

In 2019, Saha and mechanical engineer Shih-Chi Chen of the Chinese University of Hong Kong and colleagues showed that they could speed up polymerization by using patterned 2D light sheets instead of traditional pulsed lasers. “That increases the rate a thousand-fold, and you still keep those 100-nanometer features,” Saha said. Follow-up work by researchers including Chen has identified other pathways for faster nanofabrication.

Another challenge is that not all materials can be printed directly through photopolymerization: metals, for example. But Julia Greer, a materials scientist at the California Institute of Technology in Pasadena, has developed an ingenious solution.

In 2022, she and her colleagues described a method in which photopolymerized hydrogels serve as miniature templates; these are then infused with metal salts and processed in a way that induces the metal to take on the structure of the template while shrinking.

Although the technology was originally developed for micron-scale structures, Greer’s team has also applied this strategy to nanofabrication, and the researchers are enthusiastic about the potential to make functional nanostructures out of strong, high-melting metals and alloys.

The final barrier, economics, may be the hardest to break. According to Saha, pulsed laser-based systems used in many photopolymerization methods cost up to $500,000.

But cheaper alternatives are emerging. For example, physicist Martin Wegener of the Karlsruhe Institute of Technology in Germany and his colleagues explored continuous lasers that are cheaper, more compact, and consume less power than standard pulsed lasers.

Greer has also founded a startup to commercialize the manufacturing process for nanostructured metal plates that could be adapted for applications such as next-generation body armor or ultra-durable and impact-resistant outer layers for aircraft and other vehicles.

Source of information:

- [1] https://www.nature.com/collections/aihihijabe

- [2] https://www.science.org/collections/brain-cell-census

- [3] https://www.nature.com/articles/d41586-024-00173-x